Video Depth Maps, Zed and NVDA

03 Feb 2017

My latest experiment has been to generate video depth maps with the goal of splicing in 3D content.

It all starts with a stereo video:

and, after some math, becomes a video of the left view alongside its depth map:

The whiteness of each pixel in the video on the right indicates how far away that object is from the camera. A completely white pixel is 2 feet away, and a gray pixel could be, say, 10 feet away.
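If you want to reproduce a visualization like this, one simple option is a linear mapping from distance to an 8-bit gray level. This is a minimal sketch, assuming white (255) at the 2-foot near limit and black (0) at a hypothetical 20-foot far limit. `depth_to_gray` and the far limit are illustrative choices, not the Zed SDK's actual normalization.

```python
def depth_to_gray(depth_ft, near_ft=2.0, far_ft=20.0):
    """Map a distance in feet to an 8-bit gray level.

    255 (white) at near_ft, 0 (black) at far_ft, linear in between.
    far_ft = 20.0 is a hypothetical far limit chosen for illustration.
    """
    # Clamp so anything nearer than near_ft is pure white and anything
    # beyond far_ft is pure black.
    d = min(max(depth_ft, near_ft), far_ft)
    # Fraction of the way from far (0.0) to near (1.0).
    closeness = (far_ft - d) / (far_ft - near_ft)
    return round(255 * closeness)


print(depth_to_gray(2.0))   # white
print(depth_to_gray(10.0))  # mid gray
print(depth_to_gray(20.0))  # black
```

Many real pipelines map inverse depth (which is proportional to disparity) instead, which spends more of the gray range on nearby objects.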

Here are a few others:

How is this magic possible?

I had a lot of help from the Zed stereo camera:

They are using Nvidia’s CUDA to run GPGPU algorithms against the stereo images to calculate the depth of each pixel.
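To give a feel for the kind of per-pixel work involved, here is a minimal sketch of one classic technique: sum-of-absolute-differences (SAD) block matching along a single rectified scanline. This is a generic textbook method, not necessarily what the Zed SDK runs; `disparity_row`, the window size, and the disparity range are hypothetical choices for illustration.

```python
def disparity_row(left, right, max_disp=16, half_win=2):
    """Estimate per-pixel disparity along one rectified scanline using
    sum-of-absolute-differences (SAD) block matching.

    left, right: lists of grayscale intensities for the same row of the
    left and right images. Searches disparities 0..max_disp and returns
    one integer disparity per pixel.
    """
    n = len(left)
    out = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        # A point at column x in the left image appears at column x - d
        # in the right image, for some disparity d >= 0.
        for d in range(0, min(max_disp, x) + 1):
            cost = 0
            for k in range(-half_win, half_win + 1):
                xl = min(max(x + k, 0), n - 1)      # clamp window to the row
                xr = min(max(x - d + k, 0), n - 1)
                cost += abs(left[xl] - right[xr])
            if cost < best_cost:                    # keep the best match
                best_d, best_cost = d, cost
        out.append(best_d)
    return out


# A synthetic row where the left view is the right view shifted by 3 pixels.
pattern = [(i * 37) % 101 for i in range(40)]
left = [pattern[max(x - 3, 0)] for x in range(40)]
print(disparity_row(left, pattern)[8:35])
```

Each pixel's search depends only on its own neighborhood, so on a GPU the loop over `x` (and over every row) can become one thread per pixel. The resulting disparity is then converted to depth using the camera's focal length and baseline.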

Not Always Perfect

As you can see in the video above, distance dramatically affects depth perception: the horizon is obviously not the closest thing to the camera, but the depth map doesn't reflect that.

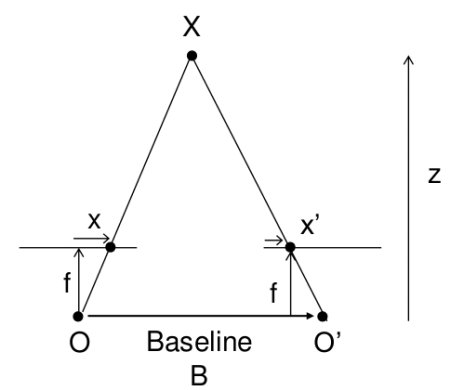

The distance between the two lenses is called the baseline. The wider the baseline, the farther away the camera can perceive depth. Conversely, the narrower the baseline, the closer it can perceive depth. The iPhone 7 Plus can only do portrait mode with subjects within eight feet because of the short baseline between its dual cameras on such a small form factor.
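The standard pinhole stereo relation makes this trade-off concrete. For a rectified pair with focal length f (in pixels) and baseline B, a point with disparity d pixels lies at depth Z = fB/d. A one-pixel disparity error therefore shifts the depth estimate by roughly Z²/(fB), and a matcher that searches at most d_max pixels cannot see anything nearer than fB/d_max. The sketch below assumes that idealized model; the focal length, search range, and baselines are hypothetical numbers, not Zed or iPhone specifications.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo model: Z = f * B / d, with Z in the same unit as B."""
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m, disparity_error_px=1.0):
    """Approximate depth uncertainty at depth Z from a disparity error dd:
    dZ ~= Z**2 / (f * B) * dd. It grows with the square of distance."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


def min_depth(focal_px, baseline_m, max_disparity_px):
    """Nearest measurable depth when the matcher searches at most
    max_disparity_px pixels of disparity."""
    return focal_px * baseline_m / max_disparity_px


FOCAL_PX = 700.0   # hypothetical focal length, in pixels
MAX_DISP = 64      # hypothetical disparity search range, in pixels

for baseline_m in (0.12, 0.01):   # hypothetical wide vs. narrow baselines
    print(
        f"baseline {baseline_m:.2f} m: "
        f"error at 3 m ~{depth_error(3.0, FOCAL_PX, baseline_m):.3f} m, "
        f"nearest depth ~{min_depth(FOCAL_PX, baseline_m, MAX_DISP):.2f} m"
    )
```

With these made-up numbers, the wide baseline's depth error at 3 m is twelve times smaller than the narrow one's, while the narrow baseline can measure objects much closer to the camera.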

Read more about generating depth maps from stereo images on the OpenCV site.


Future

This is all extremely relevant in the realm of autonomous vehicles, and that space is on fire. Want to know how far a rock is from a car? Generate a depth map in real time. That's exactly what Nvidia's graphics cards allow you to do. They excel at simple calculations run in parallel across datasets, which is exactly what neural networks in machine learning do. This general-purpose usage of the GPU (GPGPU) has led to video cards being snatched up by deep learning data centers looking for an edge in AI.

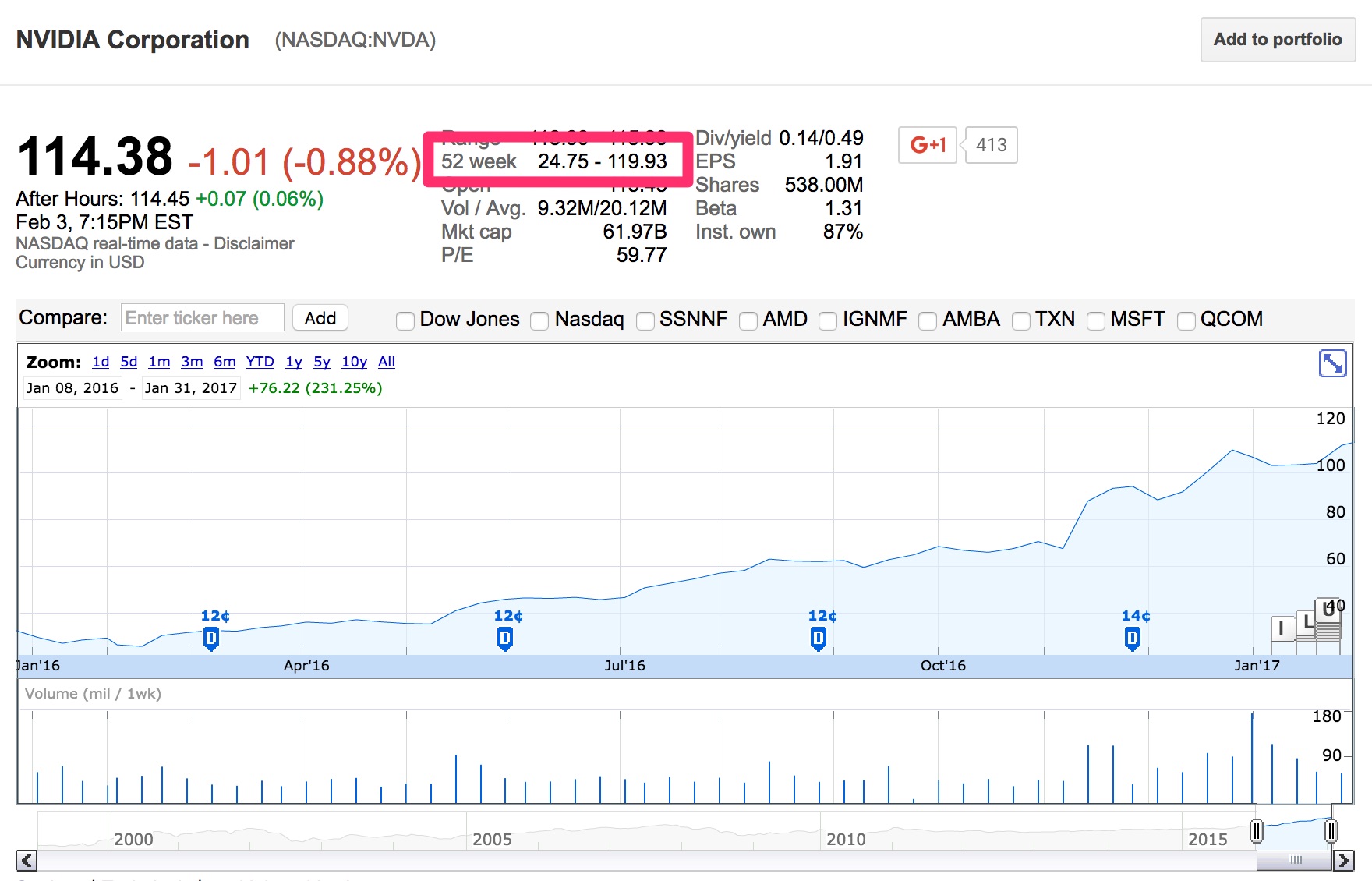

The market seems excited by it. Take a look at Nvidia's stock price over the last year: